Discover why the success of AI implementation depends more on people than technology. Learn how to build trust, overcome resistance, and drive successful human-centric AI adoption within your organization.

What You’ll Learn

- Why employees resist new AI tools and how to overcome that resistance

- How to build trust and transparency around AI systems

- A 3-phase framework for human-centric AI adoption

- Steps to create ownership, drive advocacy, and scale adoption

- The essential AI readiness checklist for lasting transformation

Look around your organization. Your workforce is amazing. You have a mix of people from their early 20s all the way to their 60s. Everyone brings a different background, culture, and perspective.

And whenever a major new piece of technology comes along, the biggest challenge isn’t installing it. It’s getting all these different people to be open to change.

This isn’t new. Think about it. We’ve been through this before. We moved from writing attendance in a big notebook to punch cards and now to face detection. Each time, the technology changed, and we learned and adapted.

But with AI, it’s different. This feels bigger. The speed at which AI is changing everything is incredible. And honestly, it’s generating a lot of fear. People are worried, and that’s understandable.

Today, as a leading AI development company, we want to talk about a major problem you might face, even if you have a brilliant AI solution ready to go.

It’s called the “People Part of AI”. And you can’t afford to swipe it away like just another notification on your phone. This one needs your full attention.

Why Your Team Might Secretly Hate Your New AI

So, why does this particular technology (AI) feel so different from switching from a notebook to a computer?

It’s because, deep down, we all understand that AI isn’t just a helper; it feels more like a colleague. And a very smart, very fast one at that. Let us break down the two biggest fears we see in organizations:

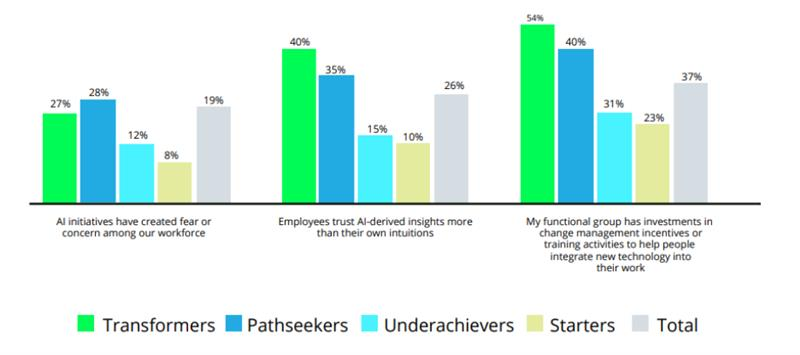

The “Black Box” Fear

A primary barrier is the “black box” problem. Professionals require understanding to trust. When AI systems deliver conclusions without transparent, interpretable reasoning, they fail to establish credibility with the experts whose work they aim to support.

Source: Deloitte

This lack of explainability forces a choice between the system’s output and an employee’s accumulated experience. When experience wins, the tool is rejected. Trust is not automatically granted. It must be earned through clarity and justification.

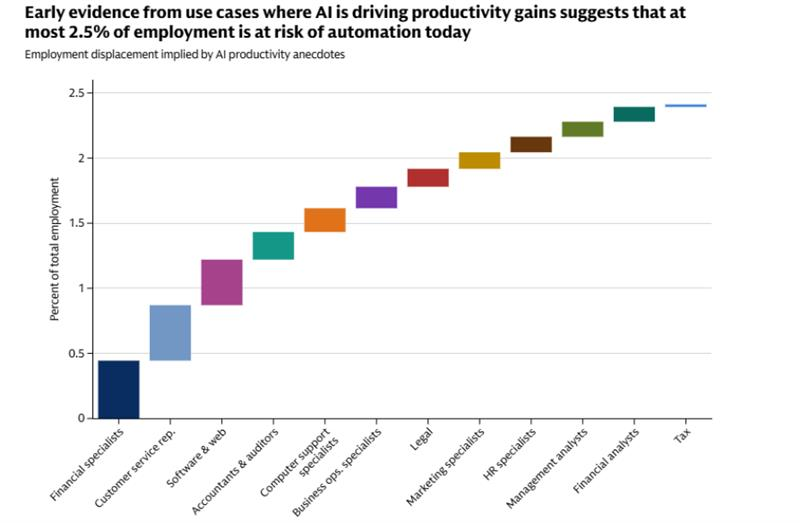

The “My Job” Fear

Beyond trust issues, AI agents can be perceived as an existential threat to an employee’s role. The language of automation and efficiency is often internally translated into a narrative of redundancy.

Source: Goldman Sachs Research

Employees may fear that their hard-won expertise is being devalued. This can lead to a crisis of professional identity. This is not simple resistance to change, but a rational response to a perceived devaluation of core skills and a threat to long-term job security.

The Strategic Framework for Human-Centric AI Adoption

The ultimate measure of a successful AI implementation is not its technical elegance. It is how smoothly the AI integrates into the daily workflows and decision-making processes of an organization.

The chasm between a functioning tool and a fully adopted one is where most projects falter. This failure is rarely a technology issue. More often, it is a cultural and operational one.

To cross this chasm, a phased framework that prioritizes human factors is essential. The following methodology ensures that AI solutions are not just deployed but embraced and leveraged for maximum impact.

Phase 1: Foster Ownership Through Co-Creation

The traditional approach of developing a solution in isolation and “rolling it out” to end-users is fundamentally flawed. It creates a disconnect between the tool’s capabilities and the users’ actual needs. This breeds skepticism and resistance from the outset.

The goal of this phase is to shift the internal narrative of the AI solution from “a tool imposed by leadership” to “a solution we built together.” Here is an actionable strategy you can follow.

a. Constitute a Cross-Functional Advisory Council:

Move beyond a simple feedback group. Form a council that is a microcosm of your organization, deliberately including influential skeptics alongside early adopters. The inclusion of skeptical voices is a critical risk mitigation strategy as their objections will reveal the most significant adoption barriers.

b. Integrate into Agile Development:

This council should be a core part of the sprint cycles. Present them with low-fidelity prototypes and wireframes, not just polished demos. Their role is to stress test the user journey and validate that the solution addresses genuine pain points within their existing workflows.

c. Demystify the Technology:

Use these sessions to transparently explain the AI’s logic in accessible terms. When a user understands the “why” behind a recommendation, it ceases to be a mysterious “black box” and becomes a trusted advisor. This transparency is the foundation of trust.

d. Close the Feedback Loop:

Crucially, when user suggestions are implemented, communicate this back to the council. This demonstrates that their input has tangible value, reinforcing their psychological ownership of the project.

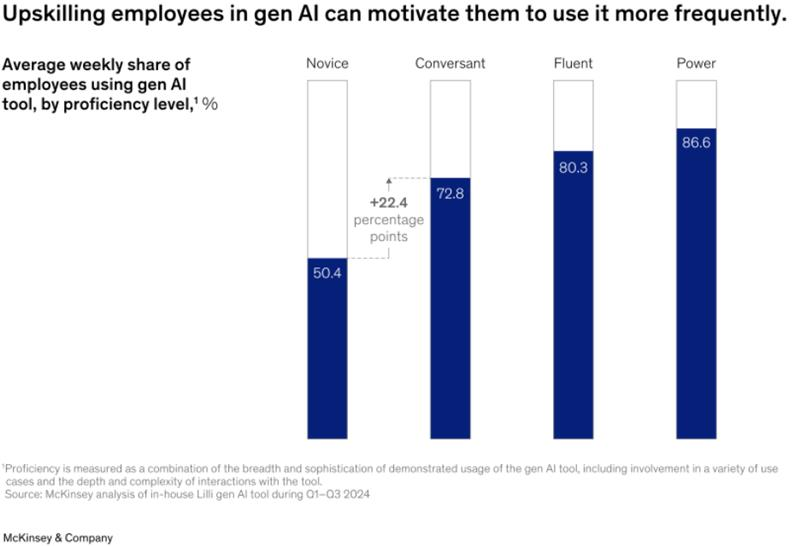

Source: McKinsey & Company

Phase 2: Validate and Refine with a Strategic Pilot

An organization-wide launch is a high-stakes gamble that often overwhelms support structures and amplifies initial hiccups into perceived systemic failures. A strategic pilot program serves as a controlled environment to validate the tool’s value proposition, refine its functionality, and generate undeniable, internal proof.

a. Select a Representative Pilot Pod:

Identify a single team or business unit whose work is representative of broader organizational challenges. Base the selection on the team’s potential to generate measurable outcomes and the presence of a respected team leader.

b. Frame the Mission Strategically:

Position the pilot not as a “test,” but as a “leadership initiative.” The pod’s mission is to co-evolve the tool and create a playbook for the rest of the organization. This framing instills a sense of purpose and responsibility.

c. Provide Embedded, Agile Support:

For a period of 4-6 weeks, provide the pilot team with immediate and dedicated support. This allows for real-time troubleshooting and iterative improvements, which ensure the tool is finely tuned to their specific context. This level of responsiveness builds confidence and demonstrates organizational commitment.

d. Document Tangible Value and Narrative Assets:

The primary output of this phase is not a technical report, but a portfolio of evidence. Quantify efficiency gains, error reduction, or revenue impact. More importantly, capture the qualitative, narrative assets: video testimonials, case studies, and specific anecdotes of how the tool solved a previously intractable problem.
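To make "quantify efficiency gains and error reduction" concrete, here is a minimal Python sketch of a before-and-after comparison for a pilot pod. Everything in it is an assumption for illustration: the `PeriodStats` fields, the `pilot_summary` helper, and the sample numbers are hypothetical, and your own pilot will likely track different measures.

```python
from dataclasses import dataclass


@dataclass
class PeriodStats:
    """Hypothetical totals for one measurement period (illustrative fields)."""
    tasks_completed: int
    total_hours: float
    errors: int


def percent_change(before: float, after: float) -> float:
    """Relative change from before to after, in percent (negative = reduction)."""
    if before == 0:
        raise ValueError("baseline value must be non-zero")
    return (after - before) / before * 100.0


def pilot_summary(baseline: PeriodStats, pilot: PeriodStats) -> dict:
    """Compare hours per task and error rate between baseline and pilot."""
    base_time = baseline.total_hours / baseline.tasks_completed
    pilot_time = pilot.total_hours / pilot.tasks_completed
    base_err = baseline.errors / baseline.tasks_completed
    pilot_err = pilot.errors / pilot.tasks_completed
    return {
        "hours_per_task_change_pct": round(percent_change(base_time, pilot_time), 1),
        "error_rate_change_pct": round(percent_change(base_err, pilot_err), 1),
    }


# Made-up sample numbers, not real results.
baseline = PeriodStats(tasks_completed=200, total_hours=400.0, errors=20)
pilot = PeriodStats(tasks_completed=250, total_hours=375.0, errors=10)
summary = pilot_summary(baseline, pilot)
print(summary)
```

Numbers like these only cover the quantitative half of the portfolio. They work best alongside the testimonials and anecdotes described above, not in place of them.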

Phase 3: Drive Scale Through Organic Advocacy

The final phase of adoption cannot be forced through a mandate. It must be catalyzed. Scaling success depends on creating a pull effect, where demand for the tool organically outpaces its deployment. This is achieved by leveraging the most powerful marketing channel available: peer-to-peer advocacy.

a. Empower Peer Evangelists:

Transition the role of “trainer” from the implementation team to the pilot pod members. Facilitate sessions where they can share their firsthand experiences and demonstrable results in their own language. Their credibility is your most valuable asset.

b. Create Observable Value in Context:

Organize “lunch and learn” demonstrations or shadowing opportunities where curious employees can see the tool in action within a realistic work context. This makes the benefits tangible and relatable, moving beyond abstract features to concrete utility.

c. Cultivate a Community of Practice:

As adoption grows, establish formal or informal channels where users can share tips, best practices, and novel use cases. This fosters a sense of community and continuous learning around the tool.

d. Incentivize and Recognize Adoption:

Publicly recognize teams and individuals who are achieving standout results with the AI. This not only rewards early adopters but also creates healthy competition and showcases the art of the possible.

The AI Adoption Readiness Checklist

Wait. Don’t launch your AI initiative just yet. Use this strategic checklist to make sure your organization is prepared for the human element of change. These steps are essential for moving from mere implementation to genuine adoption and value realization.

Phase 1: Foundation & Strategy

- Secure Active Executive Sponsorship: Confirm a C-suite leader is publicly championing the initiative and allocating necessary resources.

- Form a Cross-Functional Implementation Team: Assemble a team with representatives from IT, HR, change management, and key business units.

- Define Success Metrics: Establish clear KPIs focused on business outcomes and user adoption, not just technical performance.

- Develop a Comprehensive Communication Plan: Create a multichannel plan that clearly articulates the “why,” benefits, and impact for all stakeholder groups.

Phase 2: Mobilization & Enablement

- Identify and Empower Champions: Recruit influential early adopters across departments and equip them with tools and knowledge to advocate for the AI.

- Create Role-Specific Training & Resources: Develop tailored training that connects the AI’s functionality to specific daily tasks and workflows for different roles.

- Establish a Robust Support Structure: Set up clear support channels that users can turn to for help, so their confidence grows with use.

Phase 3: Launch & Sustainment

- Implement Continuous Feedback Mechanisms: Create formal and informal channels to gather user feedback for ongoing improvement.

- Execute a Plan for Celebrating Early Wins: Proactively identify and publicly recognize quick wins and success stories to build momentum and demonstrate value.

- Activate Long-Term Adoption Tracking: Monitor usage metrics, sentiment, and business KPIs over time to ensure sustained integration and guide future investments.
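As one possible starting point for long-term adoption tracking, the sketch below computes a weekly adoption rate and flags the weeks that fall short of a target. The function names, the 60% target, and the sample figures are assumptions chosen for illustration, not recommended benchmarks.

```python
def adoption_rate(active_users: int, eligible_users: int) -> float:
    """Share of eligible users who actively used the tool in a period."""
    if eligible_users <= 0:
        raise ValueError("eligible_users must be positive")
    return active_users / eligible_users


def weeks_below_target(weekly_active, eligible_users, target=0.6):
    """Return the 1-based week numbers whose adoption rate is under target."""
    return [
        week
        for week, active in enumerate(weekly_active, start=1)
        if adoption_rate(active, eligible_users) < target
    ]


# Made-up weekly active-user counts for a group of 100 eligible users.
weekly_active = [30, 45, 62, 70, 55]
flagged = weeks_below_target(weekly_active, eligible_users=100)
print(flagged)
```

A dip after an early peak, like the last week in this sample, is a cue to go back to users for feedback. Usage counts alone say nothing about sentiment or business impact, so treat them as only one of the three signals in the checklist item above.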

Conclusion

The most important takeaway here is that a technically perfect AI solution holds zero value if it remains unused. The sophistication of an algorithm is irrelevant in the face of organizational resistance.

Viewing change management as a secondary concern is the primary reason AI initiatives fail. It is not an optional step but the foundational discipline that bridges the gap between investment and return. It is the crucial work of aligning technology with human behavior.

Ultimately, the organizations that will thrive are not those that simply deploy the most advanced AI but those that master the integration of both the technical and the human elements. They are the ones who will unlock not just short-term efficiency but a profound and sustainable competitive advantage.