A practical, execution-focused guide to choosing the right agentic AI framework for autonomous customer support. Compare leading frameworks, evaluate technical and business tradeoffs, and avoid costly mistakes before committing to production.

Here’s what you will learn:

- Why framework decisions directly impact production speed and long-term scalability

- A side-by-side comparison of the top 10 agentic AI frameworks

- What to look for in model integration, tools, memory, orchestration, and control flow

- A practical 5-step framework selection process you can implement immediately

Every technical decision has consequences, but framework choices are particularly unforgiving. Choose well, and your agentic AI development reaches production in weeks. Choose poorly, and you’ll spend months untangling yourself from the wrong abstraction.

When you are building autonomous customer support systems, your agentic AI framework is the foundation everything else is built on. This guide will help you choose that foundation wisely.


The Agentic AI Shift: What the Numbers Say

Before we dive into frameworks, here’s where this technology is heading.

The Opportunity

Agentic AI is poised to handle 68% of customer service interactions by 2028 (Cisco). By 2026, 40% of all G2000 job roles will involve working with AI agents (IDC).

If you’re handling 100,000 monthly interactions today, that’s 68,000 automated within a few years. The cost savings and scalability gains are substantial.

The Reality Check

Gartner predicts over 40% of agentic AI projects will be canceled by end of 2027.

Why? Building production-ready autonomous agents is harder than demos suggest. Edge cases, costs, reliability, and actual business value are challenging to deliver.

What This Means

The 68% automation rate is real and achievable. But getting there requires smart framework choices and solid execution.

Your framework choice matters because execution matters. Let’s make sure you choose wisely.

Top 10 Agentic AI Frameworks Comparison

Here’s a comprehensive comparison table of the top 10 Agentic AI frameworks:

| Framework | Best For | Model Support | License | Maturity |
| --- | --- | --- | --- | --- |
| LangChain/LangGraph | Developers wanting comprehensive ecosystem | OpenAI, Anthropic, Cohere, HuggingFace, local models | MIT | High |
| AutoGen | Multi-agent collaboration and complex workflows | OpenAI, Azure OpenAI, local models via LiteLLM | Apache 2.0 | Medium |
| CrewAI | Role-based team structures | OpenAI, Anthropic, local models | MIT | Medium |
| Semantic Kernel | Enterprise .NET/C# environments | OpenAI, Azure OpenAI, HuggingFace | MIT | Medium-High |
| Haystack | Production NLP pipelines | OpenAI, Anthropic, Cohere, HuggingFace, local | Apache 2.0 | High |
| LlamaIndex | Knowledge base integration and RAG | OpenAI, Anthropic, local models, all major providers | MIT | High |
| Langroid | Clean, Pythonic multi-agent systems | OpenAI, Azure, local models | MIT | Low-Medium |
| AGiXT | Low-code/no-code agent building | Multiple providers via plugin system | MIT | Low-Medium |
| Swarm (OpenAI) | Lightweight agent handoffs | OpenAI only | MIT | Low (Experimental) |
| Custom (Direct API) | Maximum control and minimal dependencies | Your choice | N/A | Depends on you |

Note: This landscape evolves rapidly. Framework positions, features, and maturity levels change quarterly.

What Makes Customer Support Ideal for Autonomous Agents?

Customer support is arguably the ideal use case for AI agents. Here’s why the economics and structure of support make it uniquely suited to autonomous agents.

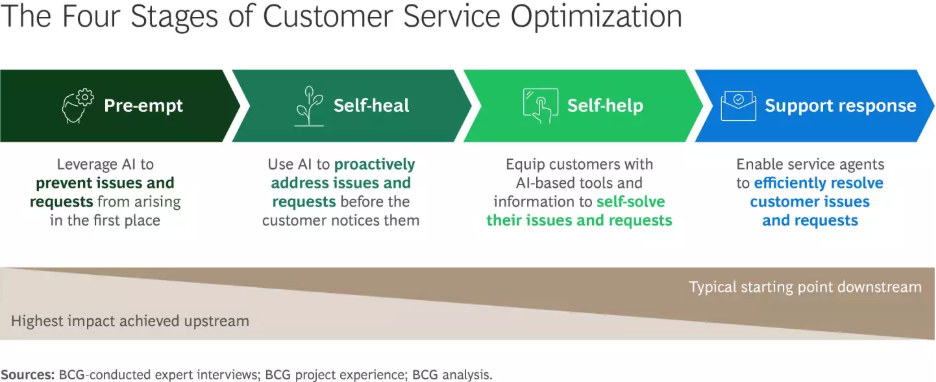

Source: BCG

- High-Volume Repetitive Operations

Most support teams handle thousands of monthly tickets, with 60-70% falling into predictable categories: password resets, order tracking, product questions, refund requests, account updates. These are not creative problems; they are predictable ones.

One agentic AI workflow can handle hundreds of simultaneous interactions, 24/7, with no fatigue and consistent behavior. The alternative is hiring proportionally to volume, which doesn’t scale economically.

- Quantifiable Success Metrics

Here is what makes customer support different from a lot of AI applications: you can actually measure whether it’s working. There’s no ambiguity, no subjective judgment calls.

You have resolution rate (how many tickets get fully resolved without a human stepping in), time to resolution (agents work in seconds instead of hours), CSAT scores (are customers actually happy with the experience), and cost per ticket (the direct financial impact).

- Structured Domain Constraints

Customer support operates inside clear boundaries and that makes autonomous agents far more reliable. Your agents aren’t trying to write creative content or solve open-ended problems.

They are working within your product catalog, your return policies, your knowledge base, and your CRM system.

This structure matters because it reduces the risk of hallucinations and unreliable outputs. The agent is executing well-defined tasks using verified data. You can give it specific tools, constrain what actions it can take, and validate everything it does.

Support tickets generally have right answers. Your job is making sure your framework helps agents find those answers consistently.

How to Evaluate Agentic AI Frameworks for Customer Support?

Now that you understand why customer support is such a strong fit for autonomous agents, let’s talk about how to actually evaluate your options. Not every framework is built the same, and the differences matter when you’re trying to ship something that works in production.

I have organized this into four main areas: technical requirements, production readiness, developer experience, and business considerations.

Some of these will matter more to you than others depending on your situation, but it’s worth thinking through all of them before you commit.

1. Technical Requirements

a. Model Integration

One of the first questions you need to answer is which language models the framework supports. Some frameworks lock you into a specific provider like OpenAI, while others let you work with Anthropic’s Claude, open-source models, or pretty much anything you want.

This matters more than you might think. Model capabilities are evolving fast, and pricing structures change.

If you build everything around one provider and can’t easily swap to another, you’re stuck. Look for frameworks that make model switching straightforward, ideally just a configuration change rather than a code rewrite.

Also consider whether you’ll need fine-tuning capabilities or retrieval-augmented generation (RAG) for your knowledge base. Not every framework handles these equally well.
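To make “just a configuration change” concrete, here’s a minimal sketch of what provider-agnostic model access can look like. Everything in it is an assumption for illustration: the provider names, `ModelConfig`, and `complete` are hypothetical, and the adapters return canned strings rather than calling any real SDK.

```python
from dataclasses import dataclass
from typing import Callable, Dict


# Hypothetical provider adapters. In a real system each would wrap a vendor
# SDK; here they return canned strings so the sketch runs offline.
def call_provider_a(model: str, prompt: str) -> str:
    return f"[provider_a:{model}] {prompt}"


def call_provider_b(model: str, prompt: str) -> str:
    return f"[provider_b:{model}] {prompt}"


# Registry mapping a provider name from config to its adapter.
PROVIDERS: Dict[str, Callable[[str, str], str]] = {
    "provider_a": call_provider_a,
    "provider_b": call_provider_b,
}


@dataclass(frozen=True)
class ModelConfig:
    provider: str
    model: str


def complete(config: ModelConfig, prompt: str) -> str:
    """Route a prompt to whichever provider the config names."""
    adapter = PROVIDERS.get(config.provider)
    if adapter is None:
        raise ValueError(f"Unknown provider: {config.provider!r}")
    return adapter(config.model, prompt)


# Swapping providers is a config edit; the calling code does not change.
primary = ModelConfig(provider="provider_a", model="small-model")
backup = ModelConfig(provider="provider_b", model="other-model")
print(complete(primary, "Where is my order?"))
print(complete(backup, "Where is my order?"))
```

When you evaluate a framework, check whether its model abstraction gives you something like this out of the box, or whether provider-specific details leak into your agent code.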

b. Tool and Integration Capabilities

Your autonomous agents need to actually do things, which means they need to connect with your existing systems.

Does the framework come with pre-built integrations for common tools like CRMs, ticketing systems like Zendesk or Freshdesk, or knowledge bases?

Pre-built integrations save you weeks of development time. But you’ll also inevitably need custom tools specific to your business.

How easy is it to build those? Can you create a new tool in an afternoon, or does it require diving deep into framework internals?

Pay attention to how the framework handles API connectivity and authentication. If you’re connecting to five different systems, each with their own auth requirements, you want a framework that makes this manageable rather than a nightmare.
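As a rough benchmark for “can you create a new tool in an afternoon,” here’s a sketch of how little code a custom tool should need. The `tool` decorator, `call_tool`, and the fake order data are hypothetical stand-ins, not any framework’s actual API.

```python
from typing import Any, Callable, Dict

# Name -> function registry the agent can dispatch against.
TOOLS: Dict[str, Callable[..., Any]] = {}


def tool(name: str) -> Callable[[Callable[..., Any]], Callable[..., Any]]:
    """Register a function under a name the agent can call."""
    def decorator(func: Callable[..., Any]) -> Callable[..., Any]:
        TOOLS[name] = func
        return func
    return decorator


# Stand-in for a real order system (assumed data).
_FAKE_ORDERS = {"A100": "shipped", "A101": "processing"}


@tool("get_order_status")
def get_order_status(order_id: str) -> dict:
    status = _FAKE_ORDERS.get(order_id)
    if status is None:
        return {"ok": False, "error": f"order {order_id} not found"}
    return {"ok": True, "order_id": order_id, "status": status}


def call_tool(name: str, **kwargs: Any) -> dict:
    """Dispatch a tool call by name, returning an error dict if unknown."""
    if name not in TOOLS:
        return {"ok": False, "error": f"unknown tool {name}"}
    return TOOLS[name](**kwargs)


print(call_tool("get_order_status", order_id="A100"))
```

If adding a tool in your candidate framework takes much more ceremony than this, that’s worth noting in your evaluation.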

c. Memory and State Management

Customer conversations aren’t isolated events. An agent needs to remember what happened two messages ago (conversation context), what happened last month (customer history), and be able to pick up where it left off if something crashes (session persistence).

How does the framework handle conversation context as interactions get longer? Some frameworks struggle when context windows grow, leading to degraded performance or lost information.

Long-term memory is trickier. Your agent should know that this customer has called three times about the same issue, or that they’re a VIP account. Does the framework provide built-in mechanisms for this, or are you building it from scratch?

Session persistence matters for reliability. If your system restarts or a connection drops, can the agent recover gracefully, or does the customer have to start over?
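Here’s a minimal sketch of the two mechanics described above: persisting a session so it survives a restart, and trimming context as conversations grow. It uses Python’s built-in `sqlite3` and `json` modules; `SessionStore` and `recent_context` are hypothetical names, and a production system would likely use a shared database and smarter summarization than simple truncation.

```python
import json
import sqlite3
from typing import Dict, List

Message = Dict[str, str]


class SessionStore:
    """Stores each conversation as a JSON blob keyed by session id."""

    def __init__(self, path: str = ":memory:") -> None:
        # ":memory:" keeps the sketch self-contained; pass a file path
        # to survive process restarts.
        self.db = sqlite3.connect(path)
        self.db.execute(
            "CREATE TABLE IF NOT EXISTS sessions "
            "(id TEXT PRIMARY KEY, messages TEXT NOT NULL)"
        )

    def save(self, session_id: str, messages: List[Message]) -> None:
        self.db.execute(
            "INSERT OR REPLACE INTO sessions (id, messages) VALUES (?, ?)",
            (session_id, json.dumps(messages)),
        )
        self.db.commit()

    def load(self, session_id: str) -> List[Message]:
        row = self.db.execute(
            "SELECT messages FROM sessions WHERE id = ?", (session_id,)
        ).fetchone()
        return json.loads(row[0]) if row else []


def recent_context(messages: List[Message], max_messages: int) -> List[Message]:
    """Keep only the newest messages so the prompt stays bounded."""
    return messages[-max_messages:] if max_messages > 0 else []


store = SessionStore()
history = [
    {"role": "user", "content": "My order is late."},
    {"role": "assistant", "content": "Let me check that for you."},
    {"role": "user", "content": "Order A100."},
]
store.save("session-1", history)
print(recent_context(store.load("session-1"), max_messages=2))
```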

d. Orchestration and Control Flow

This is where frameworks really differ. Customer support workflows are rarely simple. Resolving a refund request might involve checking order status, verifying return eligibility, initiating the refund, updating the CRM, and confirming with the customer.

How does the framework handle multi-step workflows like this? Can it manage complex logic where the next step depends on what happened in the previous one? Some frameworks make this intuitive. Others turn it into a tangled mess of callbacks and state management.

Error handling is critical. APIs fail, timeouts happen, external systems go down. Does the framework have built-in retry logic? Can you define fallback strategies? Or does every error require you to write defensive code?

Conditional branching based on customer inputs should feel natural. If a customer says they want to speak to a human, your agent needs to recognize that and route accordingly. The framework should make these kinds of decisions straightforward, not an engineering project.
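To ground this, here’s a simplified sketch of the refund example with retry logic, a fallback to escalation, and a human-request branch. The order data, the 30-day return window, and the function names are all assumptions for illustration; a real workflow would also initiate the refund and update the CRM.

```python
import time
from typing import Callable, Dict, Optional, TypeVar

T = TypeVar("T")


def with_retry(func: Callable[[], T], attempts: int = 3, base_delay: float = 0.01) -> T:
    """Call func, retrying on ConnectionError with exponential backoff."""
    for attempt in range(attempts):
        try:
            return func()
        except ConnectionError:
            if attempt == attempts - 1:
                raise
            time.sleep(base_delay * (2 ** attempt))
    raise RuntimeError("attempts must be at least 1")


# Hypothetical order data and policy.
ORDERS = {
    "A100": {"status": "delivered", "days_since_delivery": 5},
    "A200": {"status": "delivered", "days_since_delivery": 45},
}
RETURN_WINDOW_DAYS = 30


def handle_refund(
    order_id: str,
    message: str,
    fetch_order: Callable[[str], Optional[dict]] = ORDERS.get,
) -> Dict[str, str]:
    # Branch 1: the customer explicitly asks for a person.
    if "human" in message.lower():
        return {"outcome": "escalated", "reason": "customer asked for a human"}
    # Step 1: check order status, retrying transient failures.
    try:
        order = with_retry(lambda: fetch_order(order_id))
    except ConnectionError:
        # Fallback: hand off rather than leave the customer stuck.
        return {"outcome": "escalated", "reason": "order system unavailable"}
    if order is None:
        return {"outcome": "rejected", "reason": "order not found"}
    # Step 2: verify return eligibility.
    if order["days_since_delivery"] > RETURN_WINDOW_DAYS:
        return {"outcome": "rejected", "reason": "outside return window"}
    # Step 3: refund initiation and CRM update would go here.
    return {"outcome": "refunded", "reason": "eligible"}


print(handle_refund("A100", "I'd like a refund"))
print(handle_refund("A200", "I'd like a refund"))
```

A useful evaluation question is how much of this your candidate framework gives you declaratively, and how much you end up writing by hand.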

2. Production Readiness

a. Scalability

Building a demo that works for ten conversations is different from running a system that handles thousands simultaneously. How does the framework perform under real load?

Look at concurrent conversation handling. Can it manage hundreds or thousands of active sessions without degrading? What’s the latency like when you’re at peak volume versus when things are quiet?

Resource consumption directly impacts your costs. How much does each interaction cost when you factor in compute, memory, and LLM API calls? A framework that’s inefficient can turn a profitable automation into a money pit.

b. Observability

You can’t improve what you can’t see. When something goes wrong (and it will), can you figure out why? Does the framework provide comprehensive logging that shows you what the agent was thinking and doing at each step?

Debugging agent decision-making is particularly important. If your resolution rate suddenly drops, you need to understand whether it’s a prompt issue, a tool failure, or something else entirely. Some frameworks give you full visibility into the agent’s reasoning process. Others are black boxes.

Performance analytics should be built in. You want dashboards showing resolution rates, average handling time, error rates, and cost per conversation without having to build your own instrumentation layer.
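If a framework doesn’t give you step-level visibility, this is roughly the minimum you’d end up building yourself. The sketch below records one structured entry per agent step using Python’s standard `logging` and `json` modules; the `Trace` class and its field names are hypothetical.

```python
import json
import logging
import time
from typing import Any, Dict, List

logger = logging.getLogger("agent.trace")


class Trace:
    """Collects one JSON-serializable record per agent step."""

    def __init__(self, conversation_id: str) -> None:
        self.conversation_id = conversation_id
        self.steps: List[Dict[str, Any]] = []

    def record(self, step: str, detail: Dict[str, Any], ok: bool = True) -> None:
        entry = {
            "conversation_id": self.conversation_id,
            "step": step,
            "ok": ok,
            "detail": detail,
            "ts": time.time(),
        }
        self.steps.append(entry)
        # Emitted through the logging module; attach a handler to ship it.
        logger.info(json.dumps(entry))

    def error_rate(self) -> float:
        """Share of recorded steps that failed."""
        if not self.steps:
            return 0.0
        return sum(1 for s in self.steps if not s["ok"]) / len(self.steps)


trace = Trace("conv-42")
trace.record("classify_intent", {"intent": "order_status"})
trace.record("tool:get_order_status", {"order_id": "A100"})
trace.record("tool:update_crm", {"error": "timeout"}, ok=False)
trace.record("reply", {"chars": 120})
print(f"error rate: {trace.error_rate():.2f}")
```

With records like these, you can tell a prompt issue (wrong intent) from a tool failure (CRM timeout) when resolution rate drops.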

c. Reliability

Production systems fail. The question is how they fail and whether they recover gracefully. Does the framework have well-defined failure modes, or does it crash unpredictably?

Rate limiting and quota management matter when you’re working with LLM APIs that have usage caps. Does the framework handle this automatically, or will you hit limits and start dropping customer conversations?

Fallback strategies are your safety net. If the primary LLM is down or too slow, can the framework switch to a backup? If an integration fails, does it degrade gracefully or just break?
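Here’s a minimal sketch of a model fallback chain: try the primary, and on a timeout or connection failure move to the backup. The model callables are stand-ins (no real provider is called), and `complete_with_fallback` is a hypothetical helper, not a framework API.

```python
from typing import Callable, List, Sequence, Tuple


class AllModelsFailed(Exception):
    """Raised when every model in the chain fails."""


def complete_with_fallback(
    prompt: str,
    models: Sequence[Tuple[str, Callable[[str], str]]],
) -> Tuple[str, str]:
    """Try each (name, callable) in order; return (name, reply) from the first that works."""
    errors: List[str] = []
    for name, call in models:
        try:
            return name, call(prompt)
        except (TimeoutError, ConnectionError) as exc:
            errors.append(f"{name}: {type(exc).__name__}")
    raise AllModelsFailed("; ".join(errors))


# Stand-ins for real model clients.
def primary_model(prompt: str) -> str:
    raise TimeoutError("primary is too slow")


def backup_model(prompt: str) -> str:
    return f"(backup) I can help with: {prompt}"


used, reply = complete_with_fallback(
    "reset my password",
    [("primary", primary_model), ("backup", backup_model)],
)
print(used, "->", reply)
```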

d. Security and Compliance

If you’re handling customer data, this isn’t optional. What data privacy controls does the framework provide? Can you ensure that sensitive information doesn’t get logged or sent to places it shouldn’t go?

PII handling is especially important. Customer support conversations are full of personally identifiable information. Does the framework help you identify and protect this data, or is that entirely on you?

Audit trails become critical if you’re in a regulated industry. Can you prove who accessed what data and when? Do you have complete records of agent actions?

Look for frameworks that support compliance standards like SOC 2 or GDPR if those matter for your business. Building compliance on top of a framework that wasn’t designed for it is painful.
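As one illustration of keeping sensitive information out of logs, here’s a sketch that redacts email addresses and card-like digit runs before text is stored. The regular expressions are deliberately simple assumptions and will miss many PII types (names, addresses, phone formats); treat this as a starting point, not a compliance control.

```python
import re

# Simple email pattern (assumption: good enough for log scrubbing, not validation).
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
# 13 to 16 digits, optionally separated by single spaces or hyphens.
CARD_RE = re.compile(r"\b(?:\d[ -]?){12,15}\d\b")


def redact(text: str) -> str:
    """Replace emails and card-like numbers with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    text = CARD_RE.sub("[CARD]", text)
    return text


message = "Contact jane.doe@example.com about card 4111 1111 1111 1111."
print(redact(message))
```

Whether you do this yourself or the framework does it for you, the key question is where in the pipeline redaction happens: before logging, before the LLM call, or both.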

3. Developer Experience

a. Learning Curve

How long does it take to get productive with this framework? Is the documentation actually helpful, or is it incomplete and outdated?

Community size and activity matter more than you might think. When you hit a problem at 2 AM, can you find answers on Stack Overflow or GitHub discussions? Or are you the first person to encounter this issue?

Available tutorials and examples make a huge difference. Can you find working code that does something similar to what you’re building, or are you piecing things together from scattered docs?

b. Development Workflow

Can you test your agents locally before deploying to production? This sounds obvious, but some frameworks make local development difficult or impossible.

Prompt engineering is a huge part of building effective agents. Does the framework provide tools that help you iterate on prompts and see results quickly? Or is it a cycle of deploy, test, redeploy?

Version control and deployment should be straightforward. Can you track changes to your agent logic? Can you roll back if something goes wrong? How painful is the deployment process?

c. Customization

Every customer support operation is different. How flexible is the framework when you need to adapt it to your specific needs?

Extension points and plugin systems determine whether you can add capabilities without forking the entire framework. Can you extend the framework’s behavior in supported ways, or are you hacking around limitations?

Lock-in concerns are real. If you invest six months building on a framework, how hard is it to migrate to something else if your needs change? Some frameworks make this easier than others.

4. Business Considerations

a. Cost Structure

What will this actually cost you? Framework licensing varies widely. Open-source options are free but might lack enterprise features or support. Commercial frameworks charge licensing fees but often provide capabilities that would be expensive to build yourself.

LLM API costs often dwarf framework costs. A framework that makes excessive API calls can cost you thousands of dollars monthly even if the framework itself is free.

Infrastructure requirements add up. Does the framework need specific cloud services? Special databases? Compute resources that make your AWS bill explode?

b. Time to Value

How quickly can you build a proof of concept that demonstrates whether this approach will work? Some frameworks let you build something functional in a day. Others require weeks of setup before you can test anything.

The path from prototype to production varies dramatically. Some frameworks are designed for production from day one. Others are great for demos but fall apart when you try to scale.

c. Vendor Support

Do you need commercial support? If something breaks in production at 3 AM, can you get help? Enterprise support options come with service level agreements, dedicated support engineers, and faster response times.

Enterprise features like SSO, advanced security controls, and compliance certifications often only come with paid tiers. Make sure the framework can grow with your needs.

Roadmap alignment matters for long-term success. Is the framework evolving in directions that help you, or is the vendor focused on use cases that don’t match yours?


A Practical Approach to Choosing the Right Agentic AI Framework for Customer Support

Let’s walk through a practical approach that helps you move from “I need to pick something” to “I’m confident this is the right choice for us.”

Step 1: Define Your Use Case Clearly

Start narrow. I mean really narrow. Don’t begin with “we want to automate all of customer support.” That’s too broad and you’ll drown in complexity.

Instead, pick one specific workflow. Password resets. Order status inquiries. Account information updates. Something concrete.

Map out the ideal workflow for that use case. What does the customer ask? What information does the agent need to gather? What systems does it need to access? What actions does it take? What does success look like?

Write this down. Be specific.

Identify every integration point. Which APIs will you need to call? What databases need querying? These integration points often determine which frameworks are even viable.

Define success metrics upfront. What percentage of these requests should the agent handle without human intervention? What’s an acceptable response time? What CSAT score are you targeting? You need these numbers before you start building, not after.
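One way to make those numbers binding is to write them down as code before the build starts. The sketch below is a hypothetical example: the `Targets` fields, the ticket record shape, and the sample values are all assumptions you’d replace with your own.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Targets:
    min_resolution_rate: float       # share resolved without a human
    max_avg_response_seconds: float
    min_avg_csat: float              # on a 1 to 5 scale


def evaluate(tickets: List[Dict], targets: Targets) -> Dict[str, bool]:
    """Compare observed ticket outcomes against the agreed targets."""
    if not tickets:
        raise ValueError("need at least one ticket to evaluate")
    n = len(tickets)
    resolution_rate = sum(1 for t in tickets if not t["escalated"]) / n
    avg_response = sum(t["response_seconds"] for t in tickets) / n
    avg_csat = sum(t["csat"] for t in tickets) / n
    return {
        "resolution_rate_ok": resolution_rate >= targets.min_resolution_rate,
        "response_time_ok": avg_response <= targets.max_avg_response_seconds,
        "csat_ok": avg_csat >= targets.min_avg_csat,
    }


# Illustrative sample data, not real results.
sample = [
    {"escalated": False, "response_seconds": 2, "csat": 5},
    {"escalated": False, "response_seconds": 4, "csat": 4},
    {"escalated": True, "response_seconds": 6, "csat": 4},
    {"escalated": False, "response_seconds": 8, "csat": 3},
]
targets = Targets(min_resolution_rate=0.7, max_avg_response_seconds=5.0, min_avg_csat=4.2)
print(evaluate(sample, targets))
```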

Step 2: Assess Your Team’s Capabilities

Be honest about what your team can actually handle. The most powerful framework in the world doesn’t help if your team can’t use it effectively.

What’s your ML and AI expertise level? Do you have team members who understand language models, prompt engineering, and agent architectures?

Or are you learning this for the first time? Some frameworks assume deep technical knowledge. Others are designed for teams still building that expertise.

A framework that requires weeks of setup might be fine if you’ve got the resources. If you’re one person trying to ship an MVP, you need something faster.

There’s no shame in choosing a simpler framework because it matches your team’s current capabilities. You can always migrate later.

What you can’t do is recover months spent fighting a framework that was too complex for your team’s skill level.

Step 3: Create a Scoring Matrix

Now things get practical. Take those evaluation criteria we discussed and turn them into a decision tool.

List out the criteria that matter most for your use case. Not every dimension carries equal weight.

Weight each criterion based on your priorities. Use a simple scale like 1 to 3, where 3 means “absolutely critical” and 1 means “nice to have.” This forces you to make tradeoffs explicit rather than pretending everything matters equally.

Score each framework you’re considering on each dimension. Use a 1 to 5 scale. Be honest. A framework might have a great community (5) but poor documentation (2). That’s useful information.

Multiply scores by weights and add them up. The math gives you a starting point, but don’t treat it as gospel. The numbers help you see patterns. Maybe Framework A scores highest overall but rates low on your top three priorities. That’s worth noticing.

Here’s a simple template to get started:

| Criteria | Weight | Framework A | Framework B | Framework C |
| --- | --- | --- | --- | --- |
| Model Integration | 3 | 4 (12) | 3 (9) | 5 (15) |
| Tool Capabilities | 3 | 5 (15) | 4 (12) | 3 (9) |
| Observability | 2 | 3 (6) | 4 (8) | 2 (4) |
| Learning Curve | 2 | 2 (4) | 4 (8) | 3 (6) |
| Cost | 1 | 4 (4) | 3 (3) | 5 (5) |
| Total | | 41 | 40 | 39 |

The framework with the highest score isn’t automatically the winner. But the exercise forces you to articulate what you care about and how different options stack up.
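If you’d rather keep the matrix in code than in a spreadsheet, here’s a small sketch that reproduces the template’s arithmetic. The weights and scores are the placeholder values from the table; the function names are hypothetical.

```python
from typing import Dict

weights: Dict[str, int] = {
    "Model Integration": 3,
    "Tool Capabilities": 3,
    "Observability": 2,
    "Learning Curve": 2,
    "Cost": 1,
}

# Raw 1 to 5 scores per framework, copied from the template table.
scores: Dict[str, Dict[str, int]] = {
    "Framework A": {"Model Integration": 4, "Tool Capabilities": 5,
                    "Observability": 3, "Learning Curve": 2, "Cost": 4},
    "Framework B": {"Model Integration": 3, "Tool Capabilities": 4,
                    "Observability": 4, "Learning Curve": 4, "Cost": 3},
    "Framework C": {"Model Integration": 5, "Tool Capabilities": 3,
                    "Observability": 2, "Learning Curve": 3, "Cost": 5},
}


def weighted_total(framework_scores: Dict[str, int]) -> int:
    """Sum of score times weight across all criteria."""
    return sum(framework_scores[c] * w for c, w in weights.items())


def critical_subtotal(framework_scores: Dict[str, int], critical_weight: int = 3) -> int:
    """Same sum, restricted to criteria with the top weight."""
    return sum(
        framework_scores[c] * w for c, w in weights.items() if w == critical_weight
    )


for name, s in scores.items():
    print(f"{name}: total={weighted_total(s)}, critical={critical_subtotal(s)}")
```

Looking at the critical subtotal alongside the total is one way to spot the pattern mentioned earlier, where a framework leads overall but trails on your top priorities.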

Step 4: Build POCs with Your Top 2-3 Candidates

Numbers and research only get you so far. At some point, you need to write code.

Take your top two or three frameworks from the scoring exercise and build the same use case in each.

Use the same narrow use case you defined in Step 1. This keeps the comparison fair and focused. You’re not trying to build everything. You’re trying to learn whether this framework helps or fights you.

Measure what matters. How long does it take to get something working? Not just hello world, but an agent that actually connects to your systems and completes the workflow.

How does it perform? Is latency acceptable? Does it handle errors gracefully? Can you debug issues when things go wrong? These questions reveal themselves quickly when you’re actually building.

Test edge cases and failure scenarios. What happens when an API is down? When a customer gives an unexpected input? Production systems encounter these situations constantly. You want to know how each framework handles them before you commit.

Budget a week for this step, maybe two if you’re being thorough. It’s time well spent. The insights you gain from hands-on experience are worth far more than any amount of documentation reading.

Step 5: Evaluate Total Cost of Ownership

The sticker price isn’t the real cost. You need to think through what it actually costs to build, run, and maintain this system over time.

Development costs include the initial build, but also ongoing feature development and maintenance.

A framework that saves you two weeks upfront but requires constant workarounds for basic features will cost you more in the long run.

Infrastructure costs vary wildly. Check what your monthly AWS or cloud bill will look like at scale.

LLM costs often become the biggest line item. A framework that makes ten API calls per conversation versus three makes a real difference when you’re handling thousands of interactions. Do the math on your expected volume.
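Here’s a sketch of that math. The volume, tokens per call, and price per million tokens are hypothetical inputs chosen only to show the shape of the calculation; plug in your own numbers and your provider’s current pricing.

```python
def monthly_llm_cost(
    conversations: int,
    calls_per_conversation: int,
    tokens_per_call: int,
    usd_per_million_tokens: float,
) -> float:
    """Estimated monthly spend, treating every call as the same size."""
    total_tokens = conversations * calls_per_conversation * tokens_per_call
    return total_tokens / 1_000_000 * usd_per_million_tokens


# Hypothetical inputs, for illustration only.
VOLUME = 20_000            # conversations per month
TOKENS_PER_CALL = 1_500    # prompt plus completion
PRICE = 5.0                # USD per million tokens

lean = monthly_llm_cost(VOLUME, 3, TOKENS_PER_CALL, PRICE)
chatty = monthly_llm_cost(VOLUME, 10, TOKENS_PER_CALL, PRICE)
print(f"3 calls per conversation:  ${lean:,.0f}/month")
print(f"10 calls per conversation: ${chatty:,.0f}/month ({chatty / lean:.1f}x)")
```

The absolute figures depend entirely on your inputs, but the ratio holds: under these assumptions, going from three calls to ten multiplies the bill by roughly 3.3.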

Maintenance burden is the hidden cost. How much ongoing effort does this framework require? Do updates break things frequently?

Consider the opportunity cost of build versus buy. Could you achieve the same results with a managed service or commercial product? Sometimes building gives you exactly what you need. Other times you’re reinventing wheels that already exist.

Add everything up over a realistic timeframe. What does Year 1 look like? Year 2? The cheapest option upfront isn’t always the cheapest option long-term.
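A quick sketch of the Year 1 versus Year 2 comparison, using two made-up options. The build and monthly figures are purely hypothetical; the point is the crossover, not the numbers.

```python
def cumulative_cost(build_cost: int, monthly_run_cost: int, months: int) -> int:
    """One-time build cost plus running costs over the given horizon."""
    return build_cost + monthly_run_cost * months


# Hypothetical options: (one-time build cost, monthly run cost) in USD.
options = {
    "quick_start": (10_000, 6_000),     # cheap to build, expensive to run
    "heavier_setup": (50_000, 3_000),   # expensive to build, cheaper to run
}

for name, (build, monthly) in options.items():
    year1 = cumulative_cost(build, monthly, 12)
    year2 = cumulative_cost(build, monthly, 24)
    print(f"{name}: year 1 = ${year1:,}, through year 2 = ${year2:,}")
```

With these inputs, the quick-start option is cheaper after 12 months but more expensive after 24, which is exactly the kind of flip a one-year budget view hides.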

Common Mistakes to Avoid When Choosing the Agentic AI Framework

Even teams that do their research and follow a solid decision process can stumble. Here are the mistakes I see repeatedly, and how to avoid them.

- Overengineering Early

The temptation is real. You’re building an agentic AI system, so it needs to handle every possible scenario, right? Multi-agent orchestration, complex state machines, sophisticated fallback logic for every edge case.

Stop. Start simple.

Pick one workflow. Build the straightforward path. Get it working. Then add complexity only when you actually need it.

- Ignoring Observability

You can’t improve what you can’t measure. This applies to your agents just as much as any other system. Without proper logging and monitoring, you’re flying blind.

Build observability in from day one. Log agent decisions. Track performance metrics. Make it easy to replay conversations and understand what went wrong. The frameworks that make this easy will save you countless hours of frustrated debugging.

- Underestimating Prompt Engineering Effort

Here’s what many teams don’t realize until they’re deep into implementation: prompt engineering is often 50% or more of the work.

Getting the LLM to consistently do what you want, handle edge cases gracefully, and maintain the right tone takes iteration.

Budget time for prompt engineering. Expect to spend days or weeks refining prompts even for straightforward workflows. And choose a framework that makes this iteration cycle as fast as possible.

- Neglecting Human Escalation Paths

Autonomous doesn’t mean zero human involvement. It means humans focus on what they’re actually good at while agents handle the repetitive stuff.

Your agents will encounter situations they can’t handle: angry customers who want to speak to a person, or complex problems requiring judgment.

Build clear escalation paths from the start. Define when agents should hand off to humans. Make those handoffs smooth with full context transfer. Ensure your human agents can see what the autonomous agent already tried.
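Here’s a minimal sketch of an escalation rule plus the context packet a human agent would receive. The trigger phrases, the failed-attempt threshold, and the `Handoff` fields are assumptions; your own rules will be richer.

```python
from dataclasses import dataclass, field
from typing import List, Optional

# Assumed trigger phrases; real systems usually use intent classification.
ESCALATION_PHRASES = ("human", "real person", "representative")


@dataclass
class Handoff:
    """Everything a human agent needs to pick up the conversation."""
    conversation_id: str
    reason: str
    transcript: List[str]
    actions_tried: List[str] = field(default_factory=list)


def maybe_escalate(
    conversation_id: str,
    transcript: List[str],
    actions_tried: List[str],
    failed_attempts: int,
    max_failed_attempts: int = 2,
) -> Optional[Handoff]:
    """Return a Handoff if the agent should step aside, else None."""
    last_message = transcript[-1].lower() if transcript else ""
    if any(phrase in last_message for phrase in ESCALATION_PHRASES):
        reason = "customer requested a human"
    elif failed_attempts >= max_failed_attempts:
        reason = "agent could not resolve the issue"
    else:
        return None
    # Copy the lists so later agent activity does not mutate the packet.
    return Handoff(conversation_id, reason, list(transcript), list(actions_tried))


packet = maybe_escalate(
    "conv-7",
    ["Hi, my refund never arrived", "I want to talk to a human"],
    ["looked up order", "checked refund status"],
    failed_attempts=0,
)
print(packet)
```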

- Choosing Based on Hype

The newest framework gets a lot of attention. It’s trending on X. Everyone’s talking about it. It must be the best choice.

Not necessarily.

New frameworks are exciting because they try novel approaches. But they’re also less battle-tested, have smaller communities, and change frequently.

Sometimes the new framework really is better and worth the risk. Often, a more mature option that’s less exciting but more proven is the smarter choice.

Evaluate based on your needs, not the hype cycle. The framework that’s boring but works reliably beats the framework that’s exciting but breaks in production.

- Forgetting About Data Quality

You can have the most sophisticated agentic AI system in the world, but if it’s working with outdated documentation, incomplete product information, or contradictory policies, it will give wrong answers. Consistently.

Before you get deep into framework selection, audit your knowledge base. Is your documentation current? Is it complete? Is it written in a way that an AI agent can actually use?

Which is the Best Agentic AI Framework?

We’ve covered a lot of ground. Now let’s bring it home with practical recommendations based on who you are and what you’re trying to accomplish.

- If You’re a Startup or Building an MVP

Recommendation: Start with CrewAI or a lightweight LangChain implementation

You need to move fast and prove the concept works. You don’t have time to fight complex abstractions or build everything from scratch. CrewAI gives you the quickest path to a working prototype.

If you know you’ll need extensive integrations down the road, consider a focused LangChain implementation instead.

What matters most at this stage is learning whether customers actually want to interact with an autonomous agent and whether it can handle your workflows effectively.

Pick the framework that gets you to those answers fastest. You can always migrate later if needed.

- If You’re an Enterprise

Recommendation: Semantic Kernel if you’re in the Microsoft ecosystem, otherwise LangChain with proper governance

If you’re already deep in the Microsoft world with Azure infrastructure, Semantic Kernel is the natural choice.

Outside the Microsoft ecosystem, LangChain’s maturity and ecosystem make it the safer bet despite the complexity.

- If You’re a Non-Technical Leader

Business-focused guidance for making this decision:

The framework choice matters. But it matters less than having a clear strategy and the right team.

Focus on business outcomes first. What percentage of tickets do you need to automate to justify the investment? What cost per interaction makes this profitable? Answer these questions before worrying about technical details.

Trust your technical team to make the framework decision but ensure they’re thinking about the full picture.

Budget appropriately. The framework might be free, but building and running autonomous agents isn’t.

Account for LLM API costs, infrastructure, development time, and ongoing maintenance. A rough rule of thumb: if you’re handling 50,000 monthly tickets, expect to spend between $10,000 and $50,000 monthly on LLM costs alone, depending on your efficiency.
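To translate that rule of thumb into a per-ticket figure you can compare against your current cost per ticket, the arithmetic is short:

```python
MONTHLY_TICKETS = 50_000
LOW_MONTHLY_USD = 10_000
HIGH_MONTHLY_USD = 50_000

# Per-ticket LLM cost implied by the rule-of-thumb range.
low_per_ticket = LOW_MONTHLY_USD / MONTHLY_TICKETS
high_per_ticket = HIGH_MONTHLY_USD / MONTHLY_TICKETS
print(f"${low_per_ticket:.2f} to ${high_per_ticket:.2f} per ticket")
```

That range covers LLM spend only; infrastructure, development, and maintenance come on top.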

Consider the talent market. Can you hire developers who know this framework? Can your existing team learn it, or do you need an AI agent development partner?

Final Thoughts

Here’s what I want you to remember when you close this tab and start making decisions:

The framework matters less than execution. Your framework is a tool. What matters is what you build with it.

Focus on the customer experience, not the technology. Your customers don’t care whether you’re using LangChain or AutoGen or something custom. They care whether their problem gets solved quickly and accurately.

Build agents that genuinely help people. Make escalation to humans smooth and respectful. Measure what matters: resolution rates, customer satisfaction, real business impact.

The best framework is the one that helps you deliver that experience most effectively for your specific situation.

Start small, measure everything, iterate relentlessly.

This approach works regardless of which framework you choose. It also helps you figure out quickly if you chose wrong and need to pivot.

The landscape will keep changing. That’s okay. Make the best decision you can with current information. Build in ways that make it possible to adapt as things change. Don’t optimize for a future you can’t predict.

Your Next Steps

If you’re ready to move forward:

- Define your first use case specifically

- Score 2-3 frameworks against your priorities

- Build a POC with each leading candidate

- Pick the one that feels right based on actual experience

- Ship something small and learn from it

If you’re still uncertain, that’s fine too. Spend more time with the evaluation criteria. Talk to AI automation teams who’ve shipped similar systems. Build more POCs.

The teams that succeed with autonomous customer support aren’t the ones who made the perfect framework choice. They’re the ones who made a reasonable choice and executed well.

You can do this. Now go build something that helps your customers.

Frequently Asked Questions

What are agentic AI frameworks?

Agentic AI frameworks are software tools that help you build autonomous agents that can pursue goals, make decisions, and take actions on their own rather than just responding to prompts. They provide the infrastructure for connecting to external tools, managing conversation memory, orchestrating multi-step workflows, and handling errors. Without a framework, you’d be building all of this from scratch every time.

What are the most popular agentic AI frameworks?

LangChain/LangGraph dominates with the largest community and most integrations, though it’s complex. AutoGen (Microsoft) is strong for multi-agent collaboration, while CrewAI offers a simpler, role-based approach that’s easier to learn. Semantic Kernel is the enterprise choice, especially in Microsoft/Azure environments.

What are the key architectures in agentic AI frameworks?

Most frameworks use ReAct (Reasoning and Acting), where agents think, act, observe, and repeat until goals are achieved. Multi-agent architectures involve specialized agents working together on different tasks. Pipeline architectures treat workflows as connected steps with data flowing through. The architecture you need depends on your use case. Simple workflows work with basic ReAct, while complex scenarios might need multi-agent systems.
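The think, act, observe, repeat cycle can be sketched in a few lines. In this simplified illustration the “reasoning” step is a scripted function standing in for an LLM, and the tool data is made up; real frameworks add prompt construction, output parsing, and error handling around the same loop.

```python
from typing import Callable, Dict, List, Tuple

Action = Tuple[str, str]  # (tool name or "finish", argument)


def scripted_policy(question: str, observations: List[str]) -> Action:
    """Stand-in for the LLM's reasoning step."""
    if not observations:
        return ("lookup_order", "A100")
    return ("finish", f"Your order is {observations[-1]}.")


# Hypothetical tool with made-up data.
TOOLS: Dict[str, Callable[[str], str]] = {
    "lookup_order": lambda order_id: {"A100": "shipped"}.get(order_id, "unknown"),
}


def react(
    question: str,
    policy: Callable[[str, List[str]], Action],
    tools: Dict[str, Callable[[str], str]],
    max_steps: int = 5,
) -> str:
    observations: List[str] = []
    for _ in range(max_steps):
        name, arg = policy(question, observations)   # think
        if name == "finish":
            return arg
        observations.append(tools[name](arg))        # act, then observe
    # The step cap prevents a confused agent from looping forever.
    return "Escalating: step limit reached."


print(react("Where is order A100?", scripted_policy, TOOLS))
```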

What are the main design challenges in agentic AI?

The biggest challenges are reliability (handling API failures and timeouts gracefully), cost management (LLM calls add up fast), and observability (understanding why agents make certain decisions). Prompt engineering at scale is harder than expected, and managing state across conversations gets complex. Security, data privacy, and smooth human-AI handoffs require careful design. Some frameworks make these challenges easier to solve than others.

What protocols do agentic AI frameworks use?

Most frameworks don’t use a single standardized protocol. They connect to various systems using whatever those systems support. For LLMs, they use REST APIs from providers like OpenAI or Anthropic. For tools, REST APIs are most common, often with function calling for structured tool use. Emerging standards such as the Model Context Protocol (MCP) aim to standardize how agents connect to tools, but no universal “agentic AI protocol” has settled yet, so each framework still does things largely its own way, which makes switching harder but allows for innovation.